Produced: 2018

Time: 16 minutes (1 minute x 16 showpieces)

Material: audio-visual

This work visualizes how the brain reconstructs and transforms an image in response to hearing sound.

This work focuses on the relationship between visuals and sound, using the phenomenon of cross-modal attention to create an audio-visual installation.

The relationship between brain activity, sensibility, sensation, and the images and sounds we take in has long been a subject of inexhaustible interest.

Electroencephalography (EEG) has been used in the past because it is inexpensive and easy to handle. However, because it captures only the sum of the many brain activities occurring at a given moment, it could yield only vague descriptions, such as “whether the subject is awake or not.” As a result, the idea of combining brain activity analysis with art was conceived long ago but has produced no significant results for a long time.

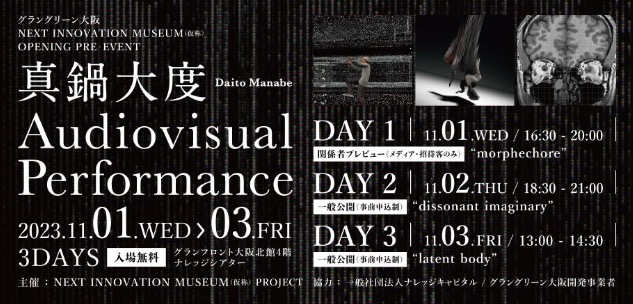

Daito Manabe, who creates works that explore the relationship between mathematics and bodily expression, had previously experimented with deep neural networks (DNNs) in combination with advanced scientific research, such as studies of neural activity.

Meanwhile, the Kamitani Yukiyasu laboratory at Kyoto University / ATR has been leading the world in developing “brain decoding,” a technology that infers mental states by combining patterns of human brain activity measured with functional magnetic resonance imaging (fMRI) and pattern recognition by machine learning. In recent years, the use of large-scale databases and DNNs together with brain decoding to identify arbitrary objects a person perceives from their brain activity patterns has attracted attention around the world.

Daito Manabe took an interest in “brain decoding” research early on, first contacting Kamitani in 2014. This led to a collaborative project based on the cross-modal attention phenomenon, exploring the relationship among brain activity, visuals, and sound.

This work focuses on the interplay between the visual and auditory senses, the relationship between sound and image, and the visualization of imagery reconstructed from activity in the visual cortex.

Have you ever experienced vivid, emotional imagery associated with a movie soundtrack or with nostalgic music you heard long ago?

How does music affect the way we experience video, and, conversely, how does video affect the way we experience music?

In the future, once technology makes it possible to generate music automatically from brain activity simply by watching a movie, or to generate visuals simply by listening to music, what forms will music and visuals take?

In this work, we speculate on the future of video and sound through the use of brain decoding.

Kamitani Lab

http://kamitani-lab.ist.i.kyoto-u.ac.jp